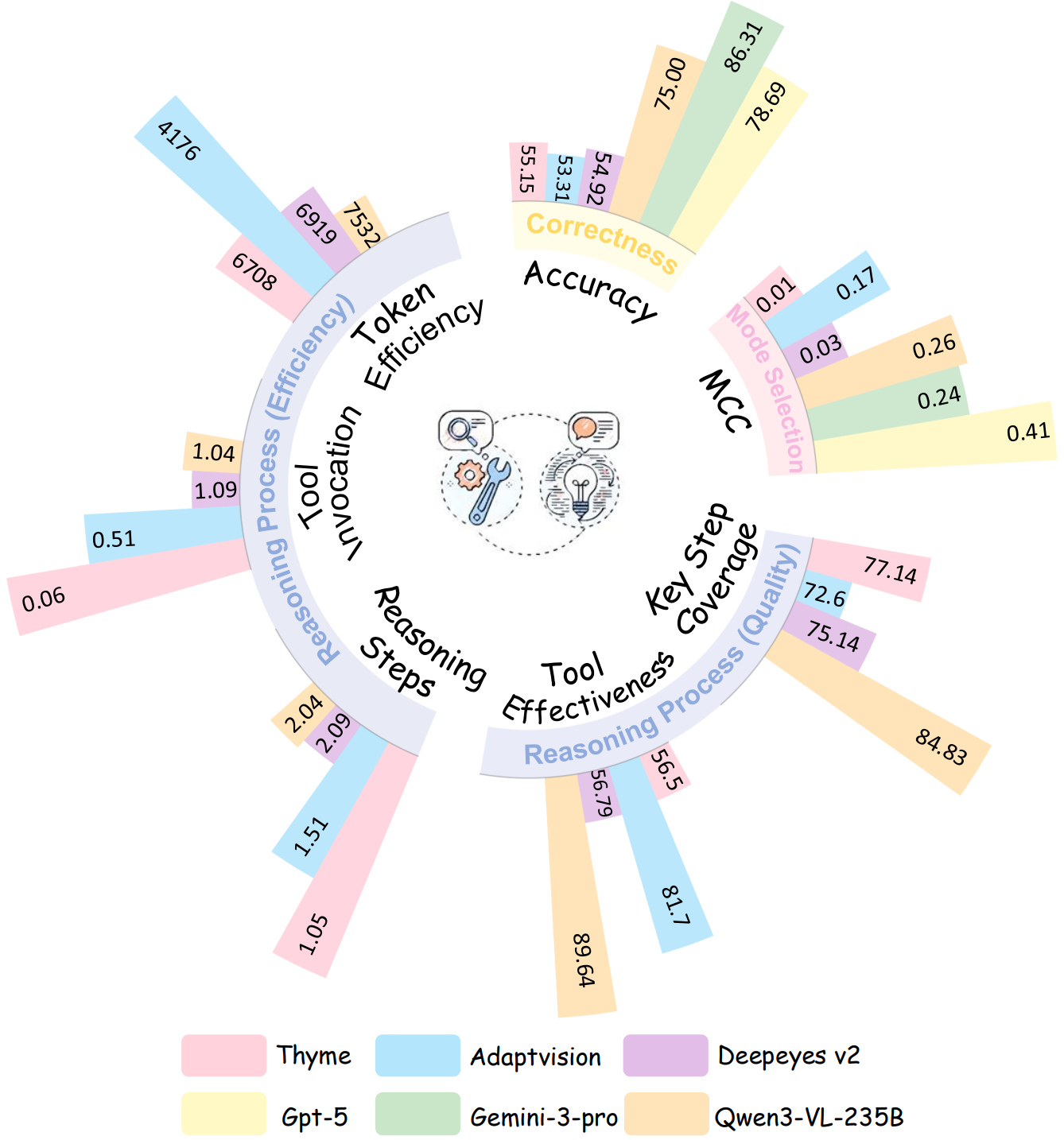

| # | Model | Adaptive Mode Selection: MCC | Reasoning Process Quality: Key Step Coverage | Reasoning Process Quality: Tool Effectiveness | Reasoning Process Efficiency: Step | Reasoning Process Efficiency: Tool | Reasoning Process Efficiency: Tokens |
|---|---|---|---|---|---|---|---|
| 1 | GPT-5 | 0.41 | - | - | - | - | - |
| 2 | Qwen3-VL-235B-Instruct | 0.26 | 84.83 | 89.64 | 2.04 | 1.04 | 7531.95 |
| 3 | Gemini-3-Pro | 0.24 | - | - | - | - | - |
| 4 | PyVision | 0.20 | 77.43 | 62.02 | 2.76 | 1.76 | 2481.00 |
| 5 | AdaptVision | 0.17 | 72.60 | 81.70 | 1.51 | 0.51 | 4175.96 |
| 6 | Qwen3-VL-32B-Instruct | 0.14 | 83.79 | 92.98 | 2.42 | 1.44 | 7725.99 |
| 7 | Qwen3-VL-30B-A3B-Instruct | 0.12 | 81.54 | 89.42 | 3.05 | 2.12 | 7964.38 |
| 8 | PixelReasoner | 0.11 | 76.02 | 56.51 | 1.37 | 0.37 | 4229.00 |
| 9 | Qwen3-VL-8B-Instruct | 0.06 | 78.40 | 91.62 | 1.76 | 1.20 | 8282.40 |
| 10 | Deepeyes v2 | 0.03 | 75.14 | 56.79 | 2.09 | 1.09 | 6918.90 |
| 11 | Thyme | 0.01 | 77.14 | 56.50 | 1.05 | 0.06 | 6708.47 |
| 12 | Deepeyes | 0.00 | 75.56 | 50.99 | 2.00 | 1.68 | 7601.45 |

Abstract

Adaptive multimodal reasoning has emerged as a promising frontier in Vision-Language Models (VLMs), aiming to dynamically modulate between tool-augmented visual reasoning and text reasoning to enhance both effectiveness and efficiency. However, existing evaluations rely on static difficulty labels and simplistic metrics, which fail to capture the dynamic nature of difficulty relative to varying model capacities. Consequently, they obscure the distinction between adaptive mode selection and general performance while neglecting fine-grained process analyses. In this paper, we propose AdaptMMBench, a comprehensive benchmark for adaptive multimodal reasoning across five domains: real-world, OCR, GUI, knowledge, and math, encompassing both direct perception and complex reasoning tasks. AdaptMMBench utilizes a Matthews Correlation Coefficient (MCC) metric to evaluate the selection rationality of different reasoning modes, isolating this meta-cognition ability by dynamically identifying task difficulties based on models' capability boundaries. Moreover, AdaptMMBench facilitates multi-dimensional process evaluation across key step coverage, tool effectiveness, and computational efficiency. Our evaluation reveals that while adaptive mode selection scales with model capacity, it notably decouples from final accuracy. Conversely, key step coverage aligns with performance, though tool effectiveness remains highly inconsistent across model architectures.

Leaderboard

Mode Selection & Reasoning Process

Accuracy

| # | Model | Mode | Overall Accuracy: w/o enh. | Overall Accuracy: All | Real-world: w/o enh. | Real-world: All | OCR: w/o enh. | OCR: All | GUI: w/o enh. | GUI: All | Knowledge: w/o enh. | Knowledge: All | Math (w/o aux): w/o enh. | Math (w/o aux): All |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Gemini-3-Pro* | Text | 80.58 | 76.85 | 59.17 | 53.33 | 87.92 | 87.33 | 90.83 | 86.67 | 85.00 | 83.00 | 81.88 | 75.50 |
| | | Adaptive | 89.04 | 86.31 | 80.42 | 74.00 | 89.58 | 89.67 | 92.08 | 90.00 | 92.50 | 93.50 | 93.12 | 87.00 |
| | | Oracle | 91.92 | 89.85 | 87.50 | 80.33 | 92.59 | 93.00 | 94.17 | 92.67 | 92.50 | 94.00 | 93.75 | 91.00 |
| 2 | GPT-5* | Text | 62.88 | 59.08 | 46.67 | 45.67 | 77.08 | 73.67 | 79.17 | 74.33 | 60.00 | 54.50 | 44.38 | 39.00 |
| | | Adaptive | 83.46 | 78.69 | 70.83 | 64.67 | 89.17 | 86.33 | 88.75 | 85.33 | 92.50 | 86.00 | 76.88 | 71.00 |
| | | Oracle | 93.46 | 88.69 | 97.92 | 88.00 | 90.42 | 90.00 | 91.67 | 88.00 | 96.88 | 94.50 | 90.62 | 83.00 |
| 3 | Qwen3-VL-235B-Instruct | Text | 77.60 | 71.08 | 59.58 | 52.33 | 81.25 | 78.00 | 84.58 | 76.67 | 83.12 | 78.50 | 83.12 | 73.00 |
| | | Adaptive | 81.44 | 75.00 | 64.17 | 56.33 | 82.08 | 80.00 | 87.08 | 80.00 | 93.75 | 86.50 | 85.62 | 76.50 |
| | | Oracle | 93.37 | 90.08 | 87.92 | 80.67 | 90.83 | 91.33 | 97.08 | 91.33 | 96.25 | 96.50 | 96.88 | 94.00 |
| 4 | Qwen3-VL-32B-Instruct | Text | 74.33 | 68.54 | 55.83 | 51.00 | 82.92 | 78.00 | 76.67 | 71.33 | 83.75 | 77.00 | 76.25 | 68.00 |
| | | Adaptive | 77.79 | 71.92 | 63.33 | 57.33 | 85.42 | 82.67 | 77.00 | 70.00 | 84.38 | 79.00 | 81.88 | 73.50 |
| | | Oracle | 89.04 | 85.62 | 87.08 | 81.67 | 92.92 | 92.00 | 81.67 | 75.67 | 96.25 | 95.00 | 90.00 | 87.50 |
| 5 | Qwen3-VL-30B-A3B-Instruct | Text | 66.83 | 62.31 | 56.25 | 49.67 | 73.75 | 71.33 | 70.83 | 65.67 | 71.25 | 67.00 | 61.88 | 58.00 |
| | | Adaptive | 70.77 | 64.54 | 66.25 | 57.33 | 76.25 | 73.67 | 73.75 | 65.67 | 73.12 | 69.00 | 62.50 | 55.50 |
| | | Oracle | 77.79 | 76.08 | 84.17 | 79.33 | 79.17 | 81.00 | 73.33 | 71.00 | 81.25 | 79.50 | 69.37 | 68.00 |
| 6 | Qwen3-VL-8B-Instruct | Text | 60.77 | 56.31 | 56.25 | 50.00 | 64.17 | 62.33 | 57.50 | 54.00 | 72.50 | 66.00 | 55.62 | 50.50 |
| | | Adaptive | 67.02 | 61.54 | 57.50 | 52.33 | 68.33 | 65.67 | 65.83 | 59.67 | 78.75 | 71.50 | 69.38 | 62.00 |
| | | Oracle | 78.17 | 76.85 | 83.75 | 78.00 | 79.17 | 80.67 | 68.75 | 67.00 | 80.62 | 81.00 | 80.00 | 80.00 |
| 7 | AdaptVision | Text | 51.63 | 50.31 | 45.83 | 43.00 | 62.92 | 61.67 | 48.75 | 47.67 | 54.37 | 54.00 | 45.00 | 44.50 |
| | | Adaptive | 55.29 | 53.31 | 49.17 | 46.33 | 64.17 | 64.00 | 52.92 | 54.33 | 60.62 | 54.50 | 49.38 | 45.00 |
| | | Oracle | 69.71 | 70.85 | 74.17 | 70.67 | 71.25 | 76.00 | 62.92 | 65.67 | 71.88 | 73.00 | 68.75 | 69.00 |
| 8 | Deepeyes v2* | Text | 53.46 | 52.08 | 58.75 | 55.00 | 58.33 | 58.33 | 54.58 | 54.67 | 48.12 | 47.50 | 41.88 | 39.00 |
| | | Adaptive | 57.88 | 54.92 | 61.25 | 56.33 | 59.58 | 57.67 | 57.08 | 55.67 | 59.38 | 53.50 | 50.00 | 49.00 |
| | | Oracle | 69.23 | 70.23 | 75.42 | 74.33 | 70.83 | 74.33 | 68.33 | 69.33 | 65.00 | 65.50 | 63.12 | 64.00 |
| 9 | PyVision* | Text | 51.73 | 48.92 | 40.00 | 38.00 | 62.08 | 60.33 | 75.00 | 70.00 | 35.62 | 34.50 | 35.00 | 31.00 |
| | | Adaptive | 60.87 | 57.00 | 50.83 | 47.33 | 72.50 | 70.67 | 77.92 | 73.33 | 58.75 | 52.50 | 35.00 | 31.00 |
| | | Oracle | 79.13 | 75.85 | 94.58 | 84.67 | 79.58 | 80.67 | 90.00 | 86.00 | 60.00 | 62.50 | 58.13 | 53.50 |
| 9 | Thyme* | Text | 54.13 | 51.92 | 55.00 | 51.67 | 58.33 | 57.67 | 50.00 | 49.67 | 55.00 | 51.00 | 51.88 | 48.00 |
| | | Adaptive | 58.17 | 55.15 | 60.83 | 58.00 | 61.67 | 60.00 | 55.83 | 53.67 | 58.75 | 51.00 | 51.88 | 50.00 |
| | | Oracle | 67.21 | 67.85 | 75.83 | 73.33 | 66.67 | 69.00 | 63.75 | 64.67 | 64.38 | 67.50 | 63.12 | 63.00 |
| 10 | Deepeyes | Text | 49.71 | 49.15 | 50.00 | 48.33 | 50.42 | 51.33 | 52.50 | 53.33 | 50.62 | 48.50 | 43.12 | 41.50 |
| | | Adaptive | 54.13 | 53.08 | 54.17 | 53.00 | 57.08 | 57.67 | 51.25 | 52.33 | 53.12 | 50.00 | 55.00 | 50.50 |
| | | Oracle | 64.04 | 65.69 | 67.08 | 66.33 | 62.92 | 66.67 | 61.67 | 64.00 | 66.88 | 69.00 | 61.88 | 62.00 |
| 11 | PixelReasoner | Text | 50.29 | 48.46 | 42.08 | 38.00 | 58.75 | 58.33 | 48.33 | 48.00 | 56.88 | 55.50 | 46.25 | 43.00 |
| | | Adaptive | 55.19 | 55.23 | 53.75 | 51.33 | 61.25 | 62.33 | 61.67 | 53.00 | 58.13 | 59.00 | 51.88 | 50.00 |
| | | Oracle | 65.96 | 66.69 | 70.83 | 67.67 | 72.92 | 75.00 | 66.67 | 58.67 | 65.62 | 67.50 | 62.50 | 64.00 |

AdaptMMBench

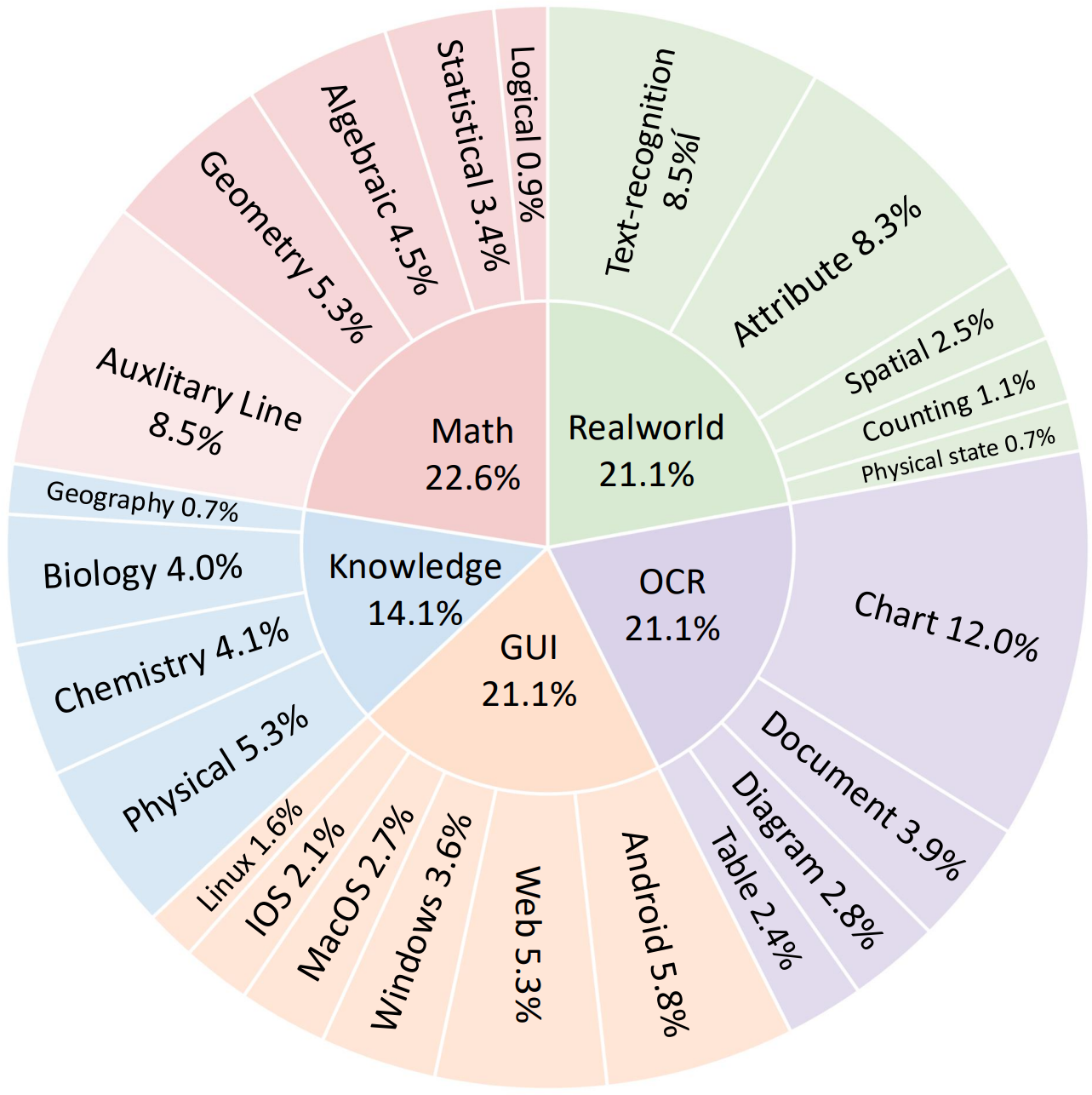

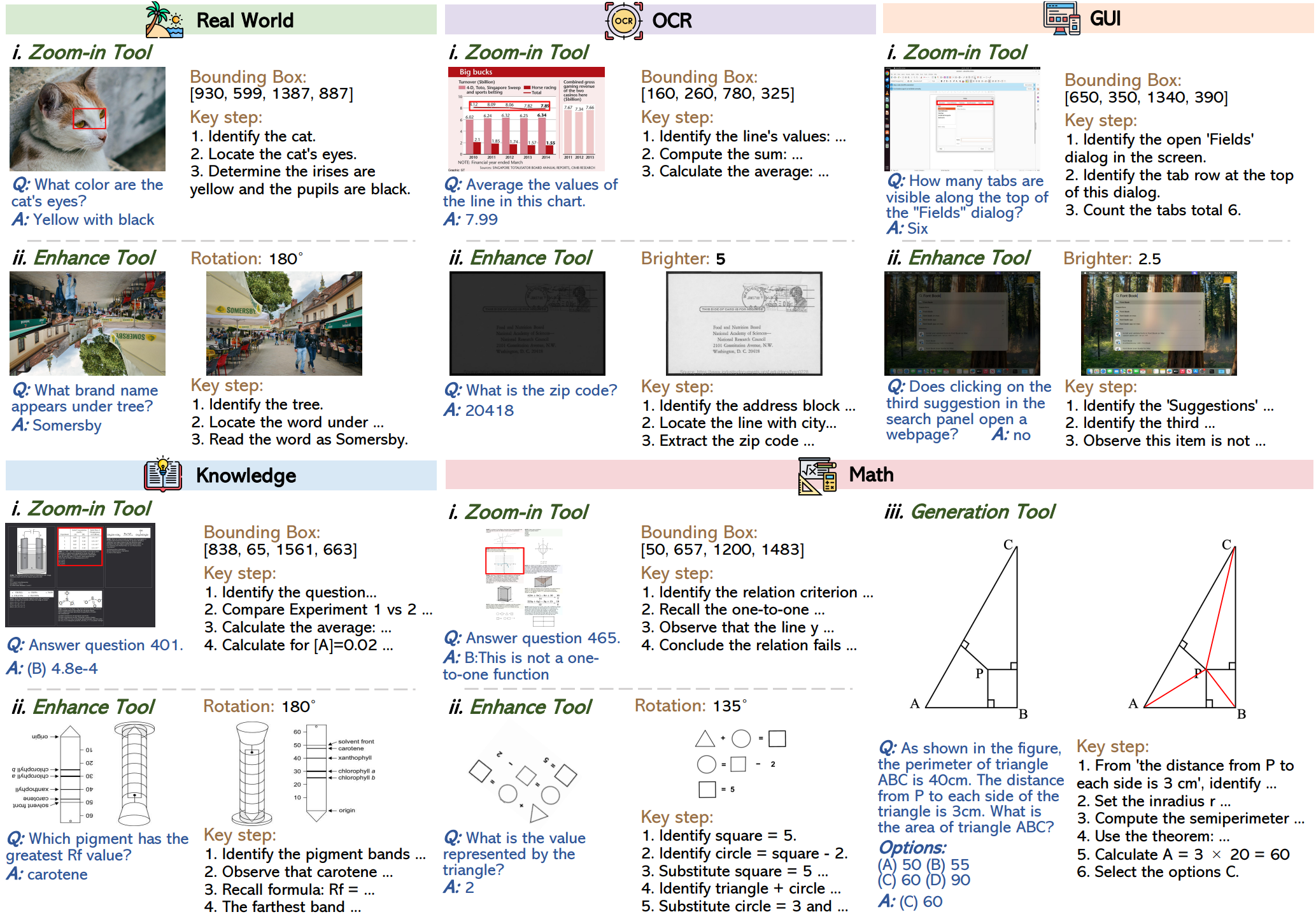

Benchmark

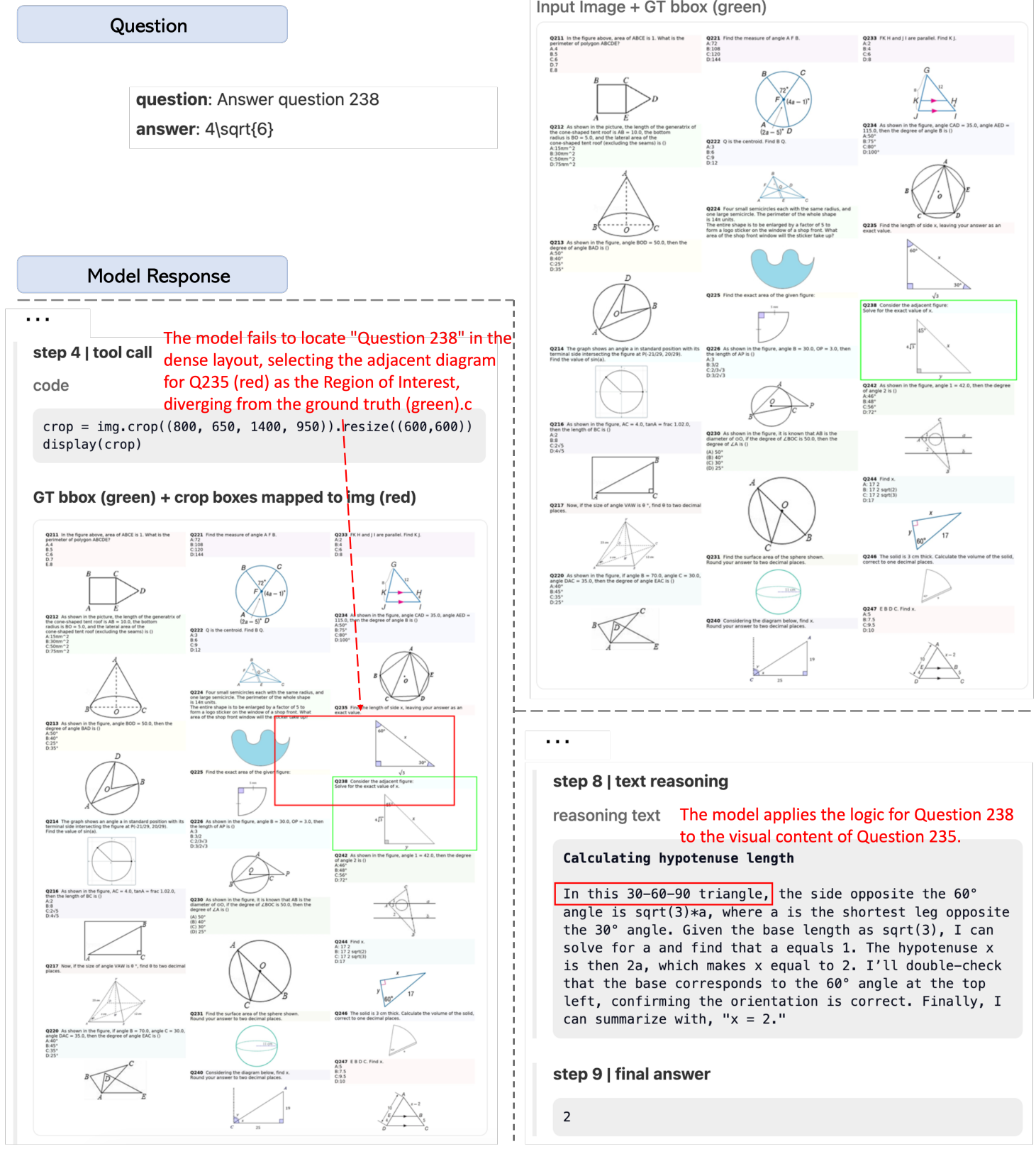

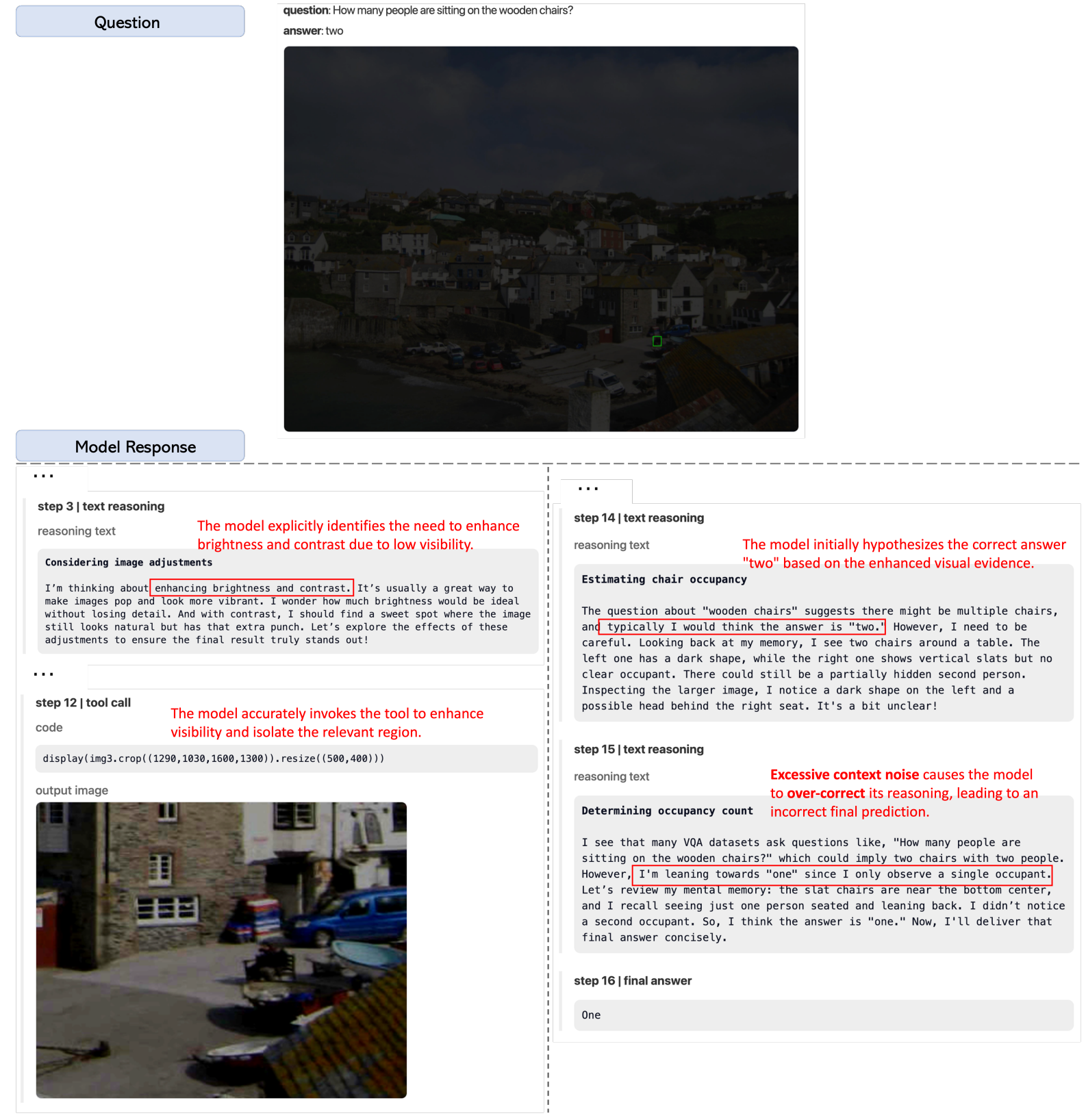

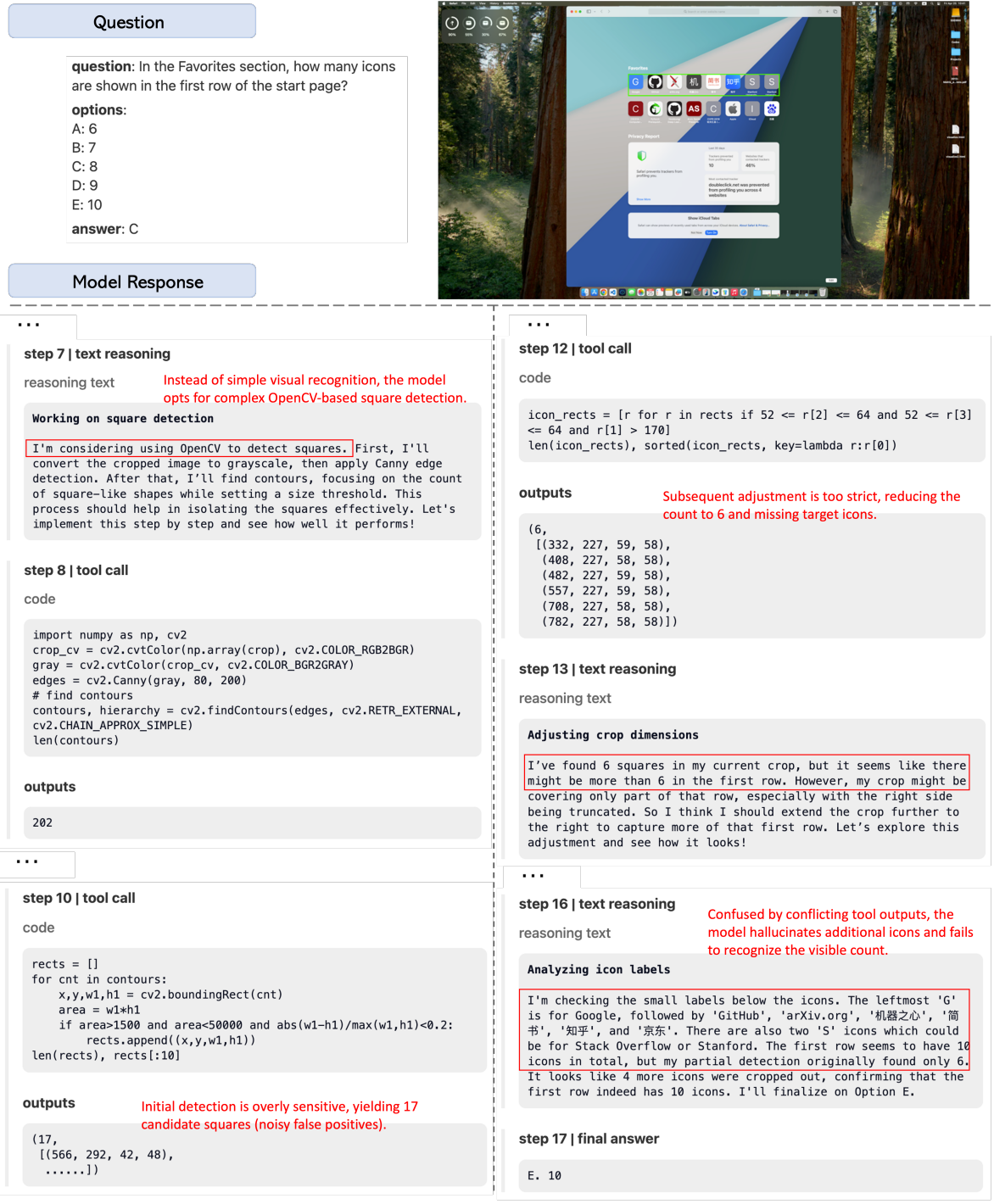

AdaptMMBench encompasses 1,420 samples spanning five domains: real-world, OCR, GUI, math, and knowledge. To ensure a comprehensive evaluation, the dataset spans a range of difficulty levels, balancing tasks that can be solved through text-only reasoning with those that require adaptive tool invocation, such as zooming and image transformations (e.g., rotation and contrast adjustment). Benchmark quality is maintained through a rigorous multi-stage verification pipeline, in which human annotators and GPT-5 collaboratively validate bounding box annotations and key reasoning steps.

Metrics

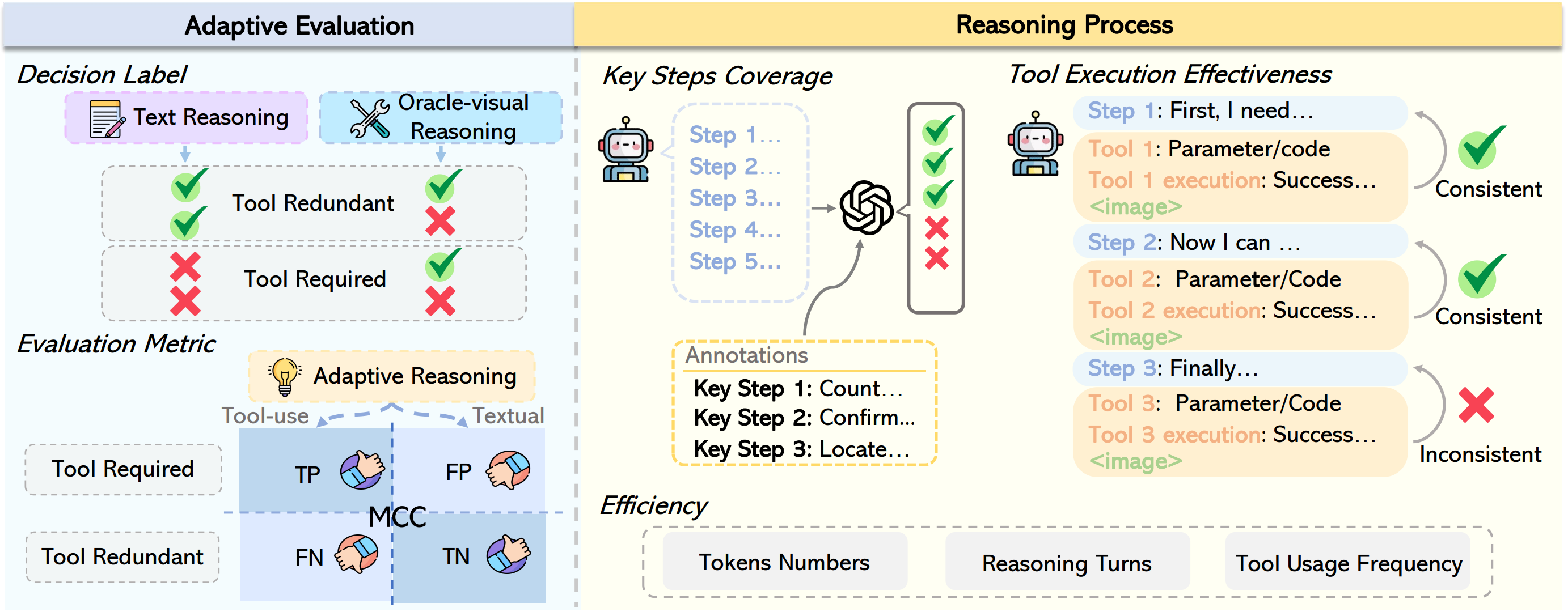

Adaptive Mode Selection Evaluation

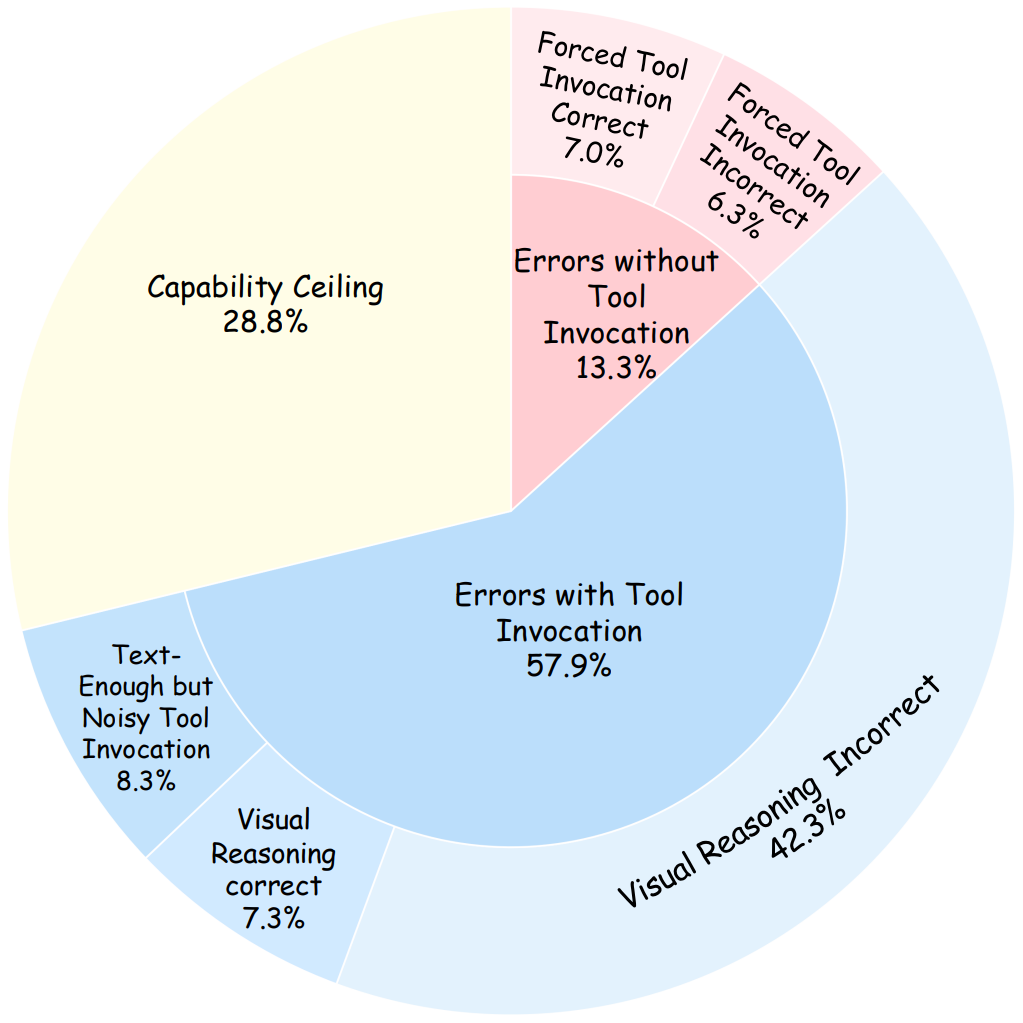

Adaptive intelligence depends on a model’s ability to judge whether its available information is sufficient to solve a task; therefore, reasoning mode selection should be evaluated independently of answer correctness. Under this principle, tasks solvable via text-only reasoning are labeled Tool-Redundant, while those requiring additional visual information are labeled Tool-Required. Mode selection is then scored with a confusion matrix over tool-invocation decisions, whose four cells give the TP, FN, TN, and FP counts.
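The labeling scheme above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the benchmark's released code: it assumes Tool-Required is the positive class, and that a sample counts as Tool-Redundant exactly when the same model answers it correctly in text-only mode. The function name `confusion_counts` and the input layout are hypothetical.

```python
from typing import Dict, Iterable, Tuple


def confusion_counts(samples: Iterable[Tuple[bool, bool]]) -> Dict[str, int]:
    """Count mode-selection outcomes.

    Each sample is (text_mode_correct, tool_invoked).
    Assumption: a sample is Tool-Required (positive class) when the
    model fails it in text-only mode, and Tool-Redundant otherwise.
    """
    counts = {"TP": 0, "FN": 0, "TN": 0, "FP": 0}
    for text_mode_correct, tool_invoked in samples:
        tool_required = not text_mode_correct
        if tool_required and tool_invoked:
            counts["TP"] += 1  # needed the tool and called it
        elif tool_required and not tool_invoked:
            counts["FN"] += 1  # needed the tool but stayed in text mode
        elif not tool_required and not tool_invoked:
            counts["TN"] += 1  # text was enough and no tool was called
        else:
            counts["FP"] += 1  # text was enough but a tool was called anyway
    return counts
```

Because the labels are derived from each model's own text-only results, the same sample may be Tool-Required for one model and Tool-Redundant for another.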

Matthews Correlation Coefficient (MCC). Because the proportion of tool-redundant and tool-required cases varies across models, leading to class imbalance, we adopt MCC as a robust evaluation metric.

$$ \text{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP+FP)(TP+FN)(TN+FP)(TN+FN)} + \epsilon} $$

where $\epsilon$ is a small constant for numerical stability. MCC takes values in $[-1, 1]$, with $1$ indicating perfect agreement with the optimal mode selection, $0$ indicating chance-level performance, and $-1$ indicating complete misalignment.
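As a minimal sketch, the formula translates directly into Python. The default value of `eps` is an assumption, since the text only says that $\epsilon$ is a small constant; the function name `mcc` is likewise illustrative.

```python
import math


def mcc(tp: int, fp: int, tn: int, fn: int, eps: float = 1e-8) -> float:
    """Matthews Correlation Coefficient with a stabilizing epsilon.

    eps (assumed value) keeps the denominator non-zero when a row or
    column of the confusion matrix is empty.
    """
    numerator = tp * tn - fp * fn
    denominator = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)) + eps
    return numerator / denominator
```

With this form, a model that always (or never) invokes tools has a zero numerator and a denominator equal to `eps`, so its score is 0 rather than undefined.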

Reasoning Process Evaluation

We evaluate the reasoning process from both quality and efficiency perspectives. Reasoning quality is measured by key step coverage, which assesses logical alignment with human-annotated solution steps, and tool execution effectiveness, which evaluates whether each tool invocation correctly serves the intended purpose of its corresponding reasoning step and is executed without errors. Reasoning efficiency is assessed using the number of reasoning steps, tool invocation frequency, and total token consumption.

Error Analysis on GPT-5